End Of The Year Move

December 29, 2009

First of all, thanks to everyone for a fantastic year. This blog and its readers really are a tremendous blessing to me.

Second, my stimulus jobs video made John Hawkins’ top 25 videos of the year list. And if you take out the videos that involve animals, people dressed as animals and blowing things up, I think I’m in the top 10.

And, lastly, I’ve moved my blog over to http://www.politicalmathblog.com where I’ll be posting from now on. I’ve been chafing against the restrictions of WordPress hosting for a while, so I’m hosting the site myself over there. I’ve also updated my “About” page to include contact information if you’d like to get a hold of me for some reason or another.

“Cash for Clunkers” – Clunker by Country Visualization

December 23, 2009

I’m currently working on a chapter for the upcoming O’Reilly book “Beautiful Visualization” (a new book in the “Beautiful” series) and one of the things that I do is walk readers step by step through gathering data and sifting through it in order to create a visualization from the Cash for Clunkers data.

As I was looking through the Cash for Clunkers data, I was fascinated by the extent to which it seemed that the clunkers being turned in were disproportionately from companies based in the US. So I dug into the data and found out that it didn’t just seem that way… 85% of the cars “clunked” came from US-based manufacturers.

So I decided to create a visualization to identify which countries gained market share due to the Cash for Clunkers program. So… here it is. Click for a larger view. (caveats below).

You can access the raw data here.

Caveats:

- Yes, nearly all Toyota and Honda and Hyundai vehicles are built in the US. I used the “where is the parent company headquartered” as my way of determining country size. That made for a more compelling image.

- It makes a certain kind of sense that people would dump a lot of old US-made vehicles because US manufacturers were at the forefront of the SUV boom in the early-mid 2000s (aughts? oughts? naughts? This next decade will be so much easier), so it seems to make sense that people who bought SUVs would be most eligible for a Cash for Clunkers rebate. If you bought a fuel efficient Toyota Camry in 2002, you’re not going to be eligible to trade your vehicle in, so it seems unlikely that you would do so.

With all that being said, I think it’s obvious that US manufacturers have lost market share on these transactions. I’d need to do a shade more research, but my understanding is that Ford (which didn’t take any bailout cash) didn’t do too badly while Chrysler and GM saw a large number of their vehicles turned in and comparatively very little purchasing.

What does this mean for the future? I don’t know. This was more for fun and for my book chapter than for anything else. And if you want to learn how to do something like this, just buy “Beautiful Visualization” when it comes out.

Bad Visualizations – David McCandless Lets Politics Get In The Way

December 3, 2009

Well, this makes me sad.

David McCandless runs the fantastic information visualization blog Information is Beautiful. Nearly all of his work is fantastic information visualization (his piece on drug deaths in England is really cool).

He recently created a visual about troops and troop deaths in Afghanistan. One part of the visualization got picked up by Andrew Sullivan. It was a graph on troop levels in Afghanistan and who has contributed the most troops. Mr. McCandless accompanies the chart with the words “That’s a huge amount of hired guns.”

The problem is that McCandless doesn’t source that number. I said to myself “71,700 hired guns? That seems high.” It didn’t pass the smell test.

I looked into the number. Near as I can tell, it’s basically a huge mistake on McCandless’ part. He didn’t source where he got the “71,700 private security contractors” stat and he didn’t say anything when I tweeted to him to ask where he got it. And he didn’t respond in the comments section of his blog when I asked. So I had to go searching for it.

It looks like the number comes from this Washington Times piece which mentions that there are 71,700 contractors, not all of whom are private security contractors. And yet McCandless not only changes this important data point (HUGE no-no in my book), he goes on to push the point with his “hired guns” comment.

Why would he do such a thing? My guess is that he doesn’t like the war in Afghanistan, so that kind of makes it OK to push a “mercenaries” point of view by lumping all contractors into the “private security” category.

Are you teaching in Kabul? You’re a “hired gun”.

Building a bridge? You’re a “hired gun”.

Flying supplies in? “Hired gun.”

Maintaining a network for the government? “Hired gun.”

Working as a translator? “Hired gun.”

It’s basically a data labeling mistake made worse by a wildly inaccurate (and, frankly, quite stupid) comment.

The reason it’s taken me so long to get to this is because I didn’t want to say anything bad about Mr. McCandless without giving him a chance to explain. It’s obvious to me that he’s not going to. If he does, I’ll post his explanation at the top of this post. But it’s given me new insight into the old saw that a lie is half-way around the world before the truth can get its pants on. Being right and being generous to others is something that takes caution and time.

(By the way, I proceeded to contact Andrew Sullivan and tell him that I thought the information was bogus. He hasn’t responded, but I figure that’s because he’s completely consumed with other correspondence. I optimistically maintain he would correct his post if he had read my note.)

Visualizing the CRU E-Mails

November 29, 2009

Very cool visualization of the Climategate e-mails over here. For more information see the Computational Legal Studies blog post.

Additionally, they have hub and authority scores for the authors of the e-mails. I like.

Thanks to Pankaj Gupta and Drew Conway for pointing me to this.

ClimateGate: Free The Data

November 25, 2009

I wanted to get this out because I’m quickly becoming consumed with other things. But I’ve been following the ClimateGate scandal for coming up on a week now. And every time I turn around it looks worse for anthropogenic global warming.

For those of you who don’t know what I’m talking about, here’s a quick summary:

Someone stole (or possibly leaked) a ton of files and e-mails from the Climate Research Unit

My position on climate change has heretofore been: “I’m not a climate scientist, but there seems to be a pretty significant agreement among those who are that the main points of climate change are solid. The earth is warming and humans are causing it to some degree. The extent to which humans are causing it (do we account for 90% of the change? 50%? 30%?) and what to do about it seems to still be a matter of debate.”

I’ve read a number of the journal articles on the matter just because I’m interested enough in what is going on and my inclination is to get as close to the data as I can.

Because that’s my thing. Data.

Everything about data is vital to the scientific process. How we collect it, how we analyze it, how we compare different sets… these things are desperately important to good scientific work. When data gets too big, we use statistical analysis to understand it and models to predict what will happen next.

Most importantly, for science to work we need people to check our work. The next scientist down the line should be able to work his way to the same conclusion in order to be able to rely on moving toward the next conclusion. Verification is the heart and soul of the scientific process.

And the process is more important than the result. If you don’t believe me, go read up on Fermat’s last theorem. Pierre de Fermat made a conjecture in 1637 that turned out to be true, but mathematicians couldn’t prove it for over 300 years. That the conjecture was true is important, but how we know it is true is the key part.

That is why I am so pissed off at the scientists at CRU. If you read their e-mails (a good collection of what they say has been compiled by Bishop Hill), they spend a ton of energy making sure other people can’t do independent verification of their data. They attack people who disagree with them, not because those people have bad data or use poor process, but because the results are not consistent with the message the CRU scientists are trying to propagate.

Add to that the fact that the CRU e-mails reveal an almost violent disregard for proper scientific peer review in favor of bullying journals into accepting only appropriate papers. And they make no bones about it: Appropriate is defined in relation to the desired result. If the result is different from what they want to hear, they work tirelessly to politically punish people who found those results.

And we haven’t even started talking about the code.

I have a solution to this, one that I believe is non-partisan and vital to future work:

- If a paper is going to be referenced in an IPCC report, its authors need to post all the data, an explanation of the process and the code for the paper where anyone can look at it and verify it.

- Any grants that are offered with federal money should require public access to the data, the process and the modeling code. If “the people” bought the research, we should be able to look at it, not just at some 10 page summary report.

- Any paper used for public policy purposes should hold the same requirement.

In short, this is a call to free the data. We can’t make decisions in the dark. If these guys have done good science, anyone with an appropriate expertise will be able to verify it.

Is this unfair to climate scientists? A violation of intellectual property?

Forgive me if I don’t give a sh**. These guys have crapped all over the scientific method and made a mockery of objective science. This kind of bad PR will take years, possibly decades, to overcome. If they want to keep their data to themselves, they can get a private firm to support their research and stop using their findings to push public policy.

Take note: This does not mean that the conclusions the CRU scientists have come to are wrong. They could be 100% right and still be huge assholes who want to hide their data from everyone else. But we have no reason to believe that they are 100% right because we can’t see the data and we don’t know their process. Just because you cheer the deaths of your opponents doesn’t make you wrong. In the future it’s going to take more to convince me than “But the scientists SAID SO!”

Also, given the blatant and horrific way in which these people have manipulated the peer review process, the “But the skeptics aren’t published in peer reviewed journals” argument is a pretty sh***y line of attack from here on out. Just from reading the e-mails, we can see that:

- That isn’t even remotely true

- Manipulation of the peer review process has been a top priority for these scientists, to the point of intentionally ruining careers and lives.

From here on out, they can have my confidence in their results when I see their data.

This is pretty funny. Or horrifying. Depends on how you want to look at it.

Several days ago, I noted on Twitter that there were a lot of “saved” jobs that weren’t saved at all but were actually cost-of-living increases. About 24 hours after I noted this, there was an Associated Press article about that very phenomenon.

Coincidence? Almost certainly. But I’ll flatter myself anyway.

But the laugh riot comes several paragraphs into the article as they look into why Southwest Georgia Community Action Council was able to save 935 jobs with a cost of living increase for only 508 people. The director of the action council said:

“she followed the guidelines the Obama administration provided. She said she multiplied the 508 employees by 1.84 — the percentage pay raise they received — and came up with 935 jobs saved.”

“I would say it’s confusing at best,” she said. “But we followed the instructions we were given.”

“Confusing at best”? The multiplication of percentages is “confusing at best”? It seems obvious to me she should have multiplied 508 people by the amount of the increase (.0184) and gotten 9.3. But she forgot that you have to divide the percentage by 100 before you multiply.

The fact that she had “saved” more jobs than there were people in the organization should have been a tip-off. But this is a pretty common problem with people who don’t have a very good grasp on mathematics… they don’t recognize obvious mathematical errors, they just plug in the numbers and go with whatever comes out.
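For anyone who wants to check the arithmetic, here is a minimal sketch of both calculations along with the kind of sanity check that would have caught the error. The function names are my own invention for illustration and are not part of any reporting guideline.

```python
def jobs_from_raise(employees: int, raise_percent: float) -> float:
    """Full-time-equivalent 'jobs' implied by a pay raise given in percent."""
    # A percentage has to be divided by 100 before it is used as a multiplier.
    return employees * (raise_percent / 100)


def is_plausible(jobs_saved: float, employees: int) -> bool:
    """Sanity check: a raise cannot save more jobs than there are employees."""
    return 0 <= jobs_saved <= employees


employees = 508
raise_percent = 1.84

reported = employees * raise_percent                     # the mistake: percent used as-is
corrected = jobs_from_raise(employees, raise_percent)    # percent converted to a fraction

print(round(reported), is_plausible(reported, employees))        # 935 False
print(round(corrected, 1), is_plausible(corrected, employees))   # 9.3 True
```

The plausibility check is the important part. It does not tell you the right answer, but it does tell you when the answer you have cannot possibly be right.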

And this, children, is why you pay attention at school. So you don’t get in the national news for doing something really stupid and then blame it on the instruction manual.

Dirty Stimulus Jobs Data Exaggerates Stimulus Impact

November 5, 2009

One of the key talking points for the stimulus that was passed earlier this year was that it would “save or create” jobs. Lots of jobs. Oodles of jobs. Jobs piled so high, we’ll have to hire people to dig us out of all the jobs we will have.

Or, more specifically, the Obama administration stated that they would “save or create” 4 million jobs.

This led to a great deal of mockery over the “save or create” turn of phrase, but the administration set out to actually measure the number of jobs that were saved or created by having recipients of the stimulus funds fill out a form in which they indicate how many jobs that particular chunk of the stimulus created (that form can be found here).

Now, if you look at recovery.gov, you’ll see that the stimulus has “saved or created” 640,000 jobs. That is only 16% of the promised jobs, but it’s still a pretty big number. I was curious how they got it, so I downloaded the raw data and started sifting through it. This is what I found:

- Over 6,500 of all the “created or saved” jobs are cost-of-living adjustments (COLA), which is really just a raise of about 2% for 6,500 people. That’s not a job saved, no matter how you calculate it.

- Over 6,000 of the jobs are federal work study jobs, which are part time jobs for needy students. As such, they’re not really “jobs” in the sense that most other federal agencies report job statistics (We don’t count full time college students as “unemployed” in the statistics.)

- About half of the jobs (over 300,000) fall under the “State Fiscal Stabilization Fund”, which can be described like so: Your state (perhaps it rhymes with Balicornia) can’t afford all the programs it has running, but when the state government tries to raise taxes, people yell and scream and threaten to move. The federal government comes in with stimulus funds and subsidizes the state programs. Consider this a “reach-around” tax in which the state can’t raise taxes on its citizens any more, but the federal government can. So the federal government just gives the state the money to keep running programs they can’t afford on their own.

- There are, scattered hither and yon, contracts and grants that state in no unclear language that “This project has no jobs created or retained” but list dozens, if not hundreds, of jobs that have been “saved or created” by the project. It makes no sense whatsoever.

Finally, there is a statistical problem to the data here that I’ve not heard discussed at all, the problem of job duration.

Because there is no guidance in the forms on the proper way to measure “a job”, recipients are left to themselves to figure out what counts as a job. Some of them fill it out by calculating “man-weeks” and assume one “job-year” to be the measurement of a single job. Others fulfill contracts that only require two weeks, but they count every person they hire for every job to be a separate job created.

As an illustration: Let’s say you have a highway construction project in the Salt Lake City area that takes one month. A foreman is hired for the project and he brings on 20 guys he likes to work with to fill out his crew. That is 21 jobs “saved or created”. While that job is being completed, the funding is being secured for another highway construction project. By the time that funding goes through, the first project is done and they decide to just move the whole crew over to the next project. That is another 21 jobs “saved or created”.

If this happens four more times, on paper it looks like 126 jobs have been “saved or created” (six projects at 21 jobs each) when in reality 21 people have been fully employed for six months. But if you judge jobs through a “man-weeks”/”job-years” lens, you have 10.5 jobs.
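Here is a quick sketch of the two counting methods side by side, using only the numbers from the highway example. Six one-month projects with a crew of 21 works out to 126 jobs on paper. The variable names are mine.

```python
CREW_SIZE = 21            # one foreman plus 20 crew members
PROJECTS = 6              # the first project, the second, and four more
MONTHS_PER_PROJECT = 1

# Counting method 1: every hire on every project is reported as a new job.
jobs_on_paper = CREW_SIZE * PROJECTS

# Counting method 2: total time worked, expressed in job-years.
person_months = CREW_SIZE * PROJECTS * MONTHS_PER_PROJECT
job_years = person_months / 12

print(jobs_on_paper)   # 126
print(job_years)       # 10.5
```

Same crew, same work, and the reported number differs by a factor of twelve depending on which definition of “a job” the recipient happened to pick.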

This is how the Blooming Grove Housing Authority in San Antonio, Texas can run a project titled “Stemules Grant” to create 450 roofing jobs for only $42 per job. My educated guess is that they hired day-laborers, paid them minimum wage or below and only worked them for a single day. Each new day brought new workers which meant more jobs “created”. Either that or they simply lied on the form. (UPDATE: USA Today interviewed the owner here. He says that he used only 5 people on the roofing jobs but that a federal official told him that his original number wasn’t right, so he adjusted it to count the number of hours worked, not the numbers of jobs created.)

Rational people can see that this kind of behavior skews the data upward. How much upward? It’s hard to say, although it is a safe bet that any project that manages to create a job for less than $20,000 is probably telling you some kind of fib.
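If you want to apply that rule of thumb to the raw data, a rough sketch might look like the following. The row layout and the second recipient are made up for illustration; the first row is derived from the Blooming Grove figures above (450 jobs at $42 each). The real data file has its own column names, which I am not reproducing here.

```python
SUSPICIOUS_COST_PER_JOB = 20_000  # dollars; the rule-of-thumb threshold from the post

# (recipient, award in dollars, jobs reported)
# Row 1 is derived from the figures in the post; row 2 is invented for contrast.
reports = [
    ("Blooming Grove Housing Authority", 450 * 42, 450),
    ("Hypothetical Paving Co.", 1_200_000, 15),
]


def flag_suspicious(rows, threshold=SUSPICIOUS_COST_PER_JOB):
    """Return (recipient, cost per job) for rows that fall below the threshold."""
    flagged = []
    for recipient, award, jobs in rows:
        if jobs <= 0:
            continue  # no jobs reported, so there is no cost per job to compute
        cost_per_job = award / jobs
        if cost_per_job < threshold:
            flagged.append((recipient, cost_per_job))
    return flagged


for recipient, cost in flag_suspicious(reports):
    print(f"{recipient}: ${cost:,.0f} per job")
```

A flag here is not proof of anything. It just tells you which rows deserve a closer look.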

My ultimate conclusion from looking at the jobs data is that:

- The jobs numbers reported on recovery.gov are heavily exaggerated

- The jobs numbers reported are not subjected to any scrutiny or auditing whatsoever; they are a simple data dump and should therefore be viewed with heavy skepticism

- The jobs numbers are a laudable transparency effort. I’m impressed that so much work has gone into trying to measure the results of the stimulus funding. Normally, these kinds of numbers would be shrouded in mystery and a normal Joe like myself would be unable to investigate them. Kudos to the Obama administration for implementing this data gathering and display initiative. However, they put too much faith in the data and statements like “The stimulus has saved or created 640,000 jobs” are uttered with a profound ignorance of the nitty-gritty details of what the data actually says.

For more interesting stimulus jobs data, you can see Paul Krugman getting angry about it here and Greg Mankiw responding to that anger here and Brad DeLong calling Allan Meltzer a shameless partisan hack about the topic over here and a story of how $900 worth of boots became 9 jobs over here. Or you can just download the jobs data and look through it yourself. There’s lots of interesting stories in there.

Are You A Real Scientist? Find Out Now!

October 19, 2009

(click for a larger view)

This chart is my social commentary on the strange attack on Stephen Dubner and Steven Levitt’s new SuperFreakonomics book. It seems a large chunk of people are worked up over a chapter in the book in which they look into alternate, controversial ways of solving the climate-change/global-warming/whatever-the-kids-are-calling-it-these-days problem.

At the eye of the storm are people accusing Dubner and Levitt of lies and misrepresentation in their book, particularly as it relates to their conversations with Ken Caldeira, a respected climate scientist working with Intellectual Ventures (possibly the most arrogantly named company ever).

Long story short: A blogger at Climate Progress claimed that Caldeira objected to his portrayal in the book and that Dubner and Levitt essentially flipped him off and left burning dog crap on his porch. Dubner responded that Caldeira read through two drafts of the chapter, correcting things he felt were wrong. Caldeira feels the authors worked in good faith and, while he may or may not agree with their conclusions, he feels their portrayal was fair.

What I find funny about the whole thing is the extent to which most people are blasting Dubner and Levitt even though they have explained repeatedly that they are not challenging the scientific status quo concerning climate change, that their reference to global cooling is a reference to finding ways to use technology to reverse global warming, which they unabashedly believe is happening. Their chapter looks at people working to solve the problem (you know, the problem of global warming which they believe is happening) who are working outside the “climate-change establishment”.

On a related note, Dubner has announced that he will change his name to Stephen Dubner-Yes-I-Believe-Climate-Change-Is-A-Problem.

But for all the blasting of Dubner and Levitt, and despite Brad DeLong’s (unconvincing, in my opinion) “you should have let me write your book” post, no one can really give me a good reason to believe that they have a better grasp on the topic than Dubner and Levitt.

(Update: Greg Mankiw gives me reason to believe that Yoram Bauman has a better grasp on the topic and he seems disappointed. However, his back and forth with Steve Levitt amounted to “You may be technically right on the specifics, but the gist you gave was inaccurate.” I’m still struggling to try and reconcile that with the statements attributed to Ken Caldeira who, you may recall, previewed multiple drafts of the chapter. If he really did OK the overall scientific gist of the chapter, that strikes me as a pretty powerful authority.)

Up till now, I’ve stayed out of the climate change arena because I don’t have anything resembling an appropriate background for dealing with the topic. But the problem I’ve found is that people on both sides of the argument don’t really give a crap about credentials or scientific rigor.

What they care about is simply “Did this guy end up on my side of the argument?” If he did, he is a real honest to goodness scientist. If he didn’t, he is a hack, a washed up old know-nothing, a dishonest tool for religious environmentalism or a shill for the oil companies (depending on which side you’re on).

The reason I’m skewering the pro-climate-change side in this visual is because they seem to be much narrower in their orthodoxy. I’ve known extremely liberal people with graduate degrees in nuclear physics who get angry because no one wants to hear their solution to climate change (hint: it rhymes with buclear flower pants) due to the fact that the movement (from a political stand point) is dominated by long time environmentalists who spent their formative years fighting against nuclear power and don’t want to admit that they might have gotten that one wrong. (How is that for a run-on sentence?)

The “climate skeptics” side is often just as scientifically lackadaisical, but they’ll welcome anyone with open arms as long as they’re even remotely skeptical of any part of the political climate change agenda. They’ll accept anything including “climate change isn’t happening”, “man isn’t causing climate change”, “climate change isn’t a problem”, or (my favorite) “the solution isn’t (Kyoto/carbon tax/ethanol/hybrid car/whatever-my-political-enemies-like), the real solution is (fill-in-the-blanks-with-something-that-will-make-my-political-enemies-angry)”.

But, ultimately, I find the pro side to be more humorous because it is populated by approximately 30 actual scientists with knowledge in the field and millions of people with no scientific knowledge in the field who just like to feel smug about being all “scientific” while bashing other people who aren’t “scientific”.

And how do they determine who falls into which category? See the chart above.

Jumping Into Visualization Without the Math

October 16, 2009

I found this link from Instapundit, so credit where it is due.

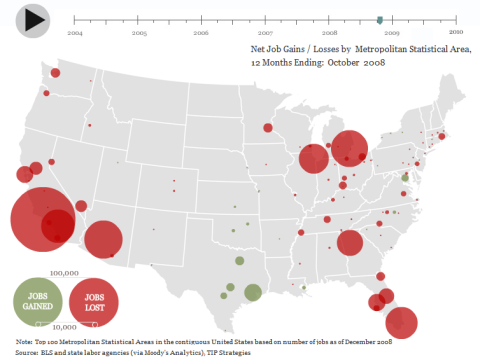

You may have seen this visual of job loss across the country. It maps the job gains and losses in major metro areas across the country and, on the surface it seems pretty cool. Here’s October 2008.

As someone who really loves information visualization, I applaud the effort. But it’s wrong.

Let’s take a quick look at the legend. See if you can spot the problem.

Keen readers will notice the problem… whoever created this visual scaled only the diameter of the circle. The problem with this is what we can see below.

Here I took the “10,000” circle and duplicated it over 50 times within the “100,000” circle. If this visual were an accurate one, we would multiply the 10,000 circle ten times to get 100,000. That’s just the way these things should work.

Math Time! (skip if you don’t care)

The area of a circle is calculated with the equation:

$A = \pi r^2$

Which means that when they increase the height of the circle by a factor of 10, they increase its area by a factor of 100. This means that instead of the numbers increasing the way they should, the small numbers end up looking REALLY small and the big ones look absurdly huge.
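The fix is to scale the radius by the square root of the value so that the area, which is what the eye actually reads, grows in proportion to the number. Here is a minimal sketch of the difference. The 5-pixel reference radius is an arbitrary assumption of mine, not something taken from the original map.

```python
import math


def radius_for_value(value: float, ref_value: float, ref_radius: float) -> float:
    """Radius that makes the circle's AREA proportional to value."""
    return ref_radius * math.sqrt(value / ref_value)


def area(radius: float) -> float:
    return math.pi * radius ** 2


ref_value, ref_radius = 10_000, 5.0   # assumed: 10,000 jobs drawn with a 5 px radius

# What the map did: scale the diameter (and so the radius) linearly with the value.
wrong_radius = ref_radius * (100_000 / ref_value)

# What it should have done: scale the radius by the square root of the value.
right_radius = radius_for_value(100_000, ref_value, ref_radius)

print(area(wrong_radius) / area(ref_radius))   # about 100 times the area
print(area(right_radius) / area(ref_radius))   # about 10 times the area
```

With square-root scaling, ten of the small circles really would add up to one of the big ones.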

End of Math Time

I’m not trying to be an a**hole here. The idea behind the visual was a good one. But these things really do need to be accurate. Most people don’t know how to tell when a visual is in error and they end up with an incorrect impression from a poorly built infographic.

Space Junk And Visual Lies

October 14, 2009

A little while back, due to a collision between a dead Russian military satellite and a US commercial satellite, there was some noise about space junk because of the potential danger it posed to the International Space Station and the Shuttle. The image of space junk that became the icon of the problem is this one (click to enlarge):

I hate this image. Passionately.

The reason I hate this image is because it is probably the biggest visual lie I’ve ever seen. In his book The Visual Display of Quantitative Information, Edward Tufte has a concept called the “Lie Factor”. The “Lie Factor” judges the extent to which the data and the visual are out of sync.

Nothing could be more out of sync with reality than this image. While it imagines the appropriate number of objects circling the earth, it completely misrepresents the scale of those objects.

Space is unimaginably huge. While there are thousands of objects circling the earth, they range in size from a volleyball to a small school bus. If you do the calculations, the objects in this image range in size from Delaware to Tennessee.

Math Time! (skip if you don’t care)

In this image the diameter of the earth is about 1950 pixels. The real diameter of the earth is about 8000 miles. That means that every pixel is a shade over 4 miles.

The smallest piece of space junk in this image is about 10 pixels wide and 18 pixels tall and the largest one is about 24 pixels wide and 104 pixels tall. That gives the small objects an area of about 3000 square miles (about 30% larger than Delaware) and the large ones an area of 41,000 square miles (a shade smaller than Tennessee).
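If you would like to redo the conversion yourself, here is a short sketch using the pixel measurements above. It treats each object as a simple width-by-height rectangle, which is my simplification. The results land near the rounded figures I quoted, and the exact values shift a little depending on how you round the miles-per-pixel scale.

```python
EARTH_DIAMETER_MILES = 8000    # rounded figure used in the post
EARTH_DIAMETER_PIXELS = 1950   # as measured in the image

miles_per_pixel = EARTH_DIAMETER_MILES / EARTH_DIAMETER_PIXELS


def footprint_sq_miles(width_px: float, height_px: float) -> float:
    """Area an object would cover if the image were drawn to scale."""
    return (width_px * miles_per_pixel) * (height_px * miles_per_pixel)


smallest = footprint_sq_miles(10, 18)
largest = footprint_sq_miles(24, 104)

print(f"{miles_per_pixel:.2f} miles per pixel")
print(f"smallest: {smallest:,.0f} sq mi, largest: {largest:,.0f} sq mi")
```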

End of Math Time

To give an example of this exaggeration, let’s look at Angelina Jolie. (How’s that for a non sequitur?) Jolie has a freckle (beauty mark, mole, whatever) above her right eye.

Let’s say we’re concerned about people getting skin cancer, so we want to make a shocking graphic that we hope will help people remember to monitor skin markings for signs of melanoma. If we lied visually as much as the space junk photo, we would change a picture of Angelina Jolie from:

to

Imagine the Photoshop is done a shade better than I can do. The intention to do good and get people to realize the severity of melanoma is all well and good, but it doesn’t justify lying to people.

Granted, the space junk image holds the disclaimer that it is “an artist’s impression”. But that isn’t how people read these kinds of things and anyone who believes otherwise is, quite frankly, lying to themselves about the realities of human perception and belief. People see these images and they expect that they match reality in some way. Do a search for “space junk” to find out how many otherwise intelligent people have accepted this image as reality without a breath to admit how inaccurate it is.

This is not to say space junk isn’t a problem. I would have “solved” the problem of visual representation by portraying the space junk as a dot. A single pixel that can clearly indicate position instead of pretending to be a representation of size. Then, I would explain that, even though these objects are very tiny compared to the size of the space they’re in, this junk moves at thousands of miles an hour… making very small objects insanely dangerous.

You could effectively compare it to shooting a bullet into the air. A tiny piece of metal in a huge space can be really dangerous. People get that. There is no reason to portray the bullet as a 747.

I’m worried that even scientific people either didn’t recognize this problem or didn’t feel the need to speak up about it. Even people experienced in infographics didn’t say anything (see here, and here). (Side Note: I take particular pleasure in smacking down Wired magazine for putting up this graphic without even mentioning that it is an “artist rendering”. As a whole, they tend to be smug and irritating in the extent to which they dismiss anyone without technical or scientific expertise. Here they reveal that they are just as susceptible to junk science as the average Joe.)

There is an extent to which many people in scientific and technical journalism are content to give people the appropriate impression (“Space junk is a dangerous problem”) without providing them with the appropriate information. Or, to put the problem simply, they think the end justifies the means.

I take the view that truth in data is of the highest importance. I’m frustrated by how lonely it is out here on my high ground.